Cisco Systems has introduced a new networking chip and router designed to meet the growing demands of artificial intelligence workloads, positioning itself against rivals Nvidia and Broadcom.

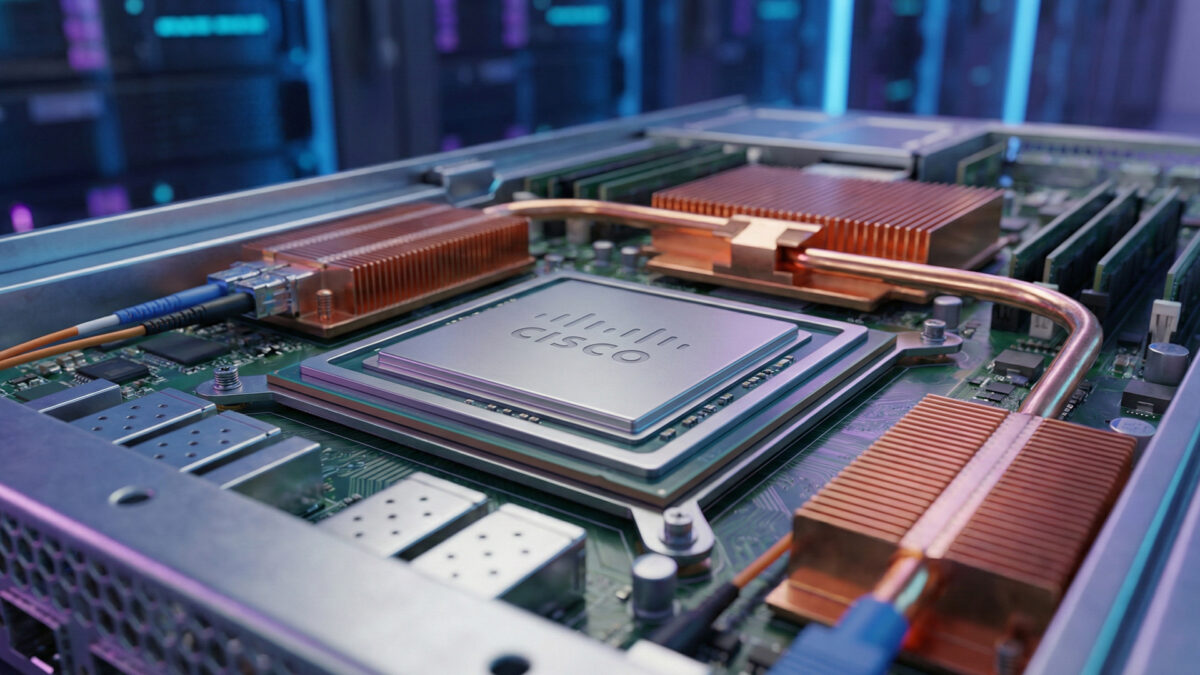

The new chip, called Silicon One G300, is a high‑capacity switch chip that helps move massive amounts of data quickly and efficiently inside AI data centres. These environments require seamless communication between thousands of AI processors, and delays can slow down training and inference tasks.

Manufactured by TSMC on a 3‑nanometer process, the G300 is expected to be commercially available in the second half of 2026. Cisco says the chip includes built-in features for handling sudden spikes in network traffic, which it calls "shock absorber" capabilities. According to the company, these features can automatically reroute data to prevent bottlenecks and improve performance on some AI tasks by up to 28%.

Cisco’s move highlights the growing importance of networking technology in the AI industry. While GPUs and AI accelerators get most of the attention, efficient data movement between devices is critical for large-scale AI systems to work effectively.

The G300 competes directly with Broadcom’s Tomahawk series and Nvidia’s AI networking chips. Cisco is targeting both large cloud providers and enterprises building their own AI clusters, betting that better network performance will be a key advantage as demand for AI infrastructure grows.

AI workloads are driving a surge in demand for faster, more reliable networking. By focusing on high-speed chip design and intelligent traffic management, Cisco aims to capture a share of the $600 billion AI infrastructure market.
