Researchers have developed a humanoid robot that can accurately lip-sync with spoken words and sounds, marking a major advance in human-robot communication. The breakthrough comes from scientists at Columbia University, who trained the robot to move its lips and other facial features in sync with speech using artificial intelligence rather than fixed programming.

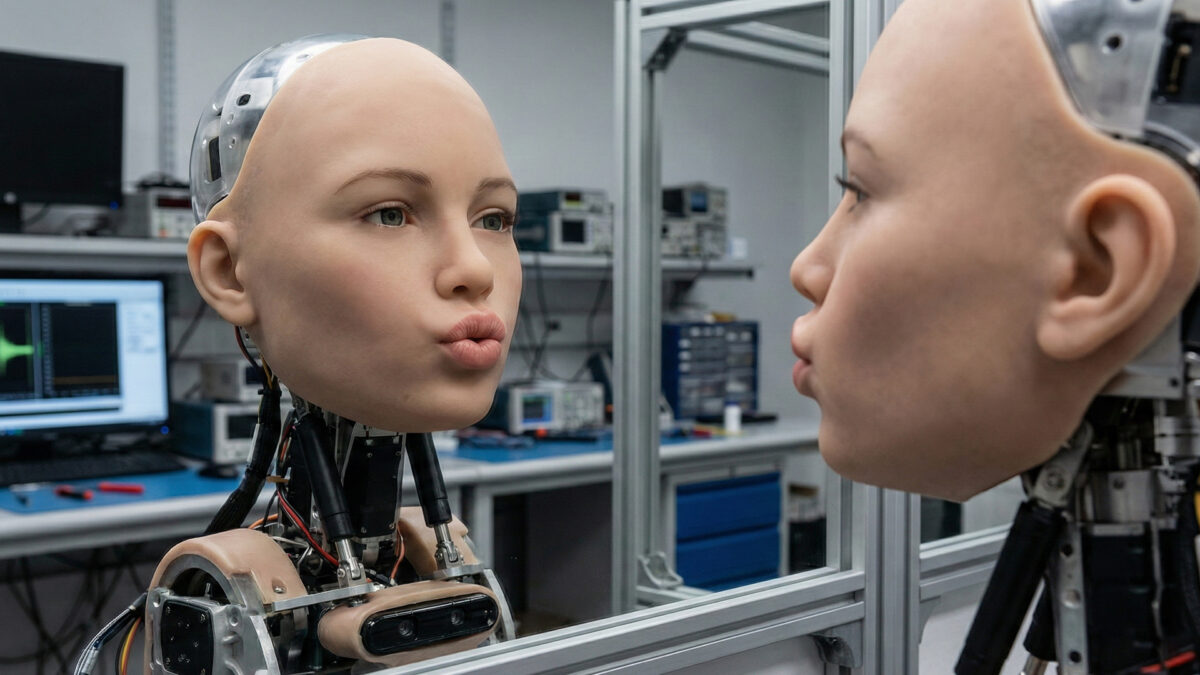

Unlike traditional robots that rely on pre-set rules for facial movement, this robot learns by observing and experimenting. It first studied its own face in a mirror, making thousands of random expressions to understand how its facial motors work. Once it had learned the basics, it watched hours of videos of people talking, which allowed it to copy the natural lip movements linked to specific sounds.
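The mirror stage can be pictured as a small experiment: send random motor commands, record what the face does, and fit a model that links the two. The sketch below is only an illustration of that idea, not the researchers' method. The simulated face (`TRUE_MAP`, `observe_face`), the motor and landmark counts, and the use of a plain linear least-squares fit are all assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

N_MOTORS = 26     # assumption: the article only says "more than two dozen"
N_LANDMARKS = 15  # assumption: number of tracked (x, y) points on the face

# Hypothetical stand-in for the real face seen in a mirror: a hidden linear
# map from motor commands to landmark coordinates, plus camera noise.
TRUE_MAP = rng.normal(size=(N_MOTORS, 2 * N_LANDMARKS))


def observe_face(commands):
    """Return noisy landmark coordinates for a batch of motor commands."""
    noise = rng.normal(scale=0.01, size=(commands.shape[0], 2 * N_LANDMARKS))
    return commands @ TRUE_MAP + noise


# 1. "Babbling": thousands of random expressions, each observed in the mirror.
commands = rng.uniform(0.0, 1.0, size=(5000, N_MOTORS))
landmarks = observe_face(commands)

# 2. Self-model: least-squares fit from motor commands to landmark positions.
forward_model, *_ = np.linalg.lstsq(commands, landmarks, rcond=None)


def commands_for(target_landmarks):
    """Invert the learned self-model: find motor commands for a target face."""
    solution, *_ = np.linalg.lstsq(forward_model.T, target_landmarks, rcond=None)
    return np.clip(solution, 0.0, 1.0)  # keep commands in the valid motor range


# 3. Check: pick a face shape the robot has never made and recover its commands.
true_cmd = rng.uniform(0.0, 1.0, size=N_MOTORS)
recovered = commands_for(true_cmd @ TRUE_MAP)
max_error = float(np.max(np.abs(recovered - true_cmd)))
```

A real face is far from linear, so a learned self-model would more plausibly be a neural network, but the loop of acting, observing, and fitting stays the same.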

The robot’s face contains more than two dozen motors that control lips, jaw, cheeks, and other features. Using machine learning, the system connects audio signals directly to these motors, enabling the robot to produce realistic mouth movements for speech and even singing. The model can adapt to different languages because it learns sound-to-movement patterns instead of memorising words.
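One way to picture "connecting audio signals directly to motors" is a model that turns each short slice of sound into one command per motor, with no step that recognises words. The following is a minimal sketch under stated assumptions: the frame sizes, the eight frequency bands, the motor count, and the single linear layer with untrained placeholder weights are all hypothetical, chosen for brevity rather than taken from the Columbia system.

```python
import numpy as np

SAMPLE_RATE = 16_000  # assumption: 16 kHz mono audio
FRAME_LEN = 400       # 25 ms analysis window
HOP = 160             # 10 ms step between frames
N_BANDS = 8           # assumption: coarse frequency bands per frame
N_MOTORS = 26         # assumption: the article only says "more than two dozen"


def frame_features(audio):
    """Split audio into overlapping frames and return log band energies."""
    n_frames = 1 + (len(audio) - FRAME_LEN) // HOP
    window = np.hanning(FRAME_LEN)
    feats = np.empty((n_frames, N_BANDS))
    for i in range(n_frames):
        frame = audio[i * HOP : i * HOP + FRAME_LEN] * window
        power = np.abs(np.fft.rfft(frame)) ** 2
        bands = np.array_split(power, N_BANDS)
        feats[i] = np.log1p([band.sum() for band in bands])
    return feats


def audio_to_motors(audio, weights, bias):
    """Map each audio frame to motor commands in [0, 1] via a sigmoid layer."""
    feats = frame_features(audio)
    return 1.0 / (1.0 + np.exp(-(feats @ weights + bias)))


# Usage: one second of a 220 Hz tone and placeholder (untrained) weights.
rng = np.random.default_rng(0)
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
audio = np.sin(2 * np.pi * 220.0 * t)
weights = rng.normal(scale=0.1, size=(N_BANDS, N_MOTORS))
bias = np.zeros(N_MOTORS)
motor_track = audio_to_motors(audio, weights, bias)  # one row per 10 ms frame
```

Because the input is sound frames rather than text, nothing in this pipeline depends on a particular language, which is the property the article attributes to the real system. In practice the weights would be learned from the videos of people talking.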

Scientists say realistic lip-syncing is crucial for making robots appear more natural and trustworthy. Poorly matched speech and facial movements often make humanoid robots seem unsettling to humans, a reaction commonly described as the "uncanny valley". By improving this synchronisation, the new system helps reduce that effect.

The technology could be useful in education, entertainment, healthcare, and customer service, where robots may need to speak clearly and expressively. While the robot still struggles with some complex sounds, researchers believe the work brings machines a step closer to natural, human-like interaction.